PRISM

Performance Restoration & Isolated Structural Modeling

Meet PRISM

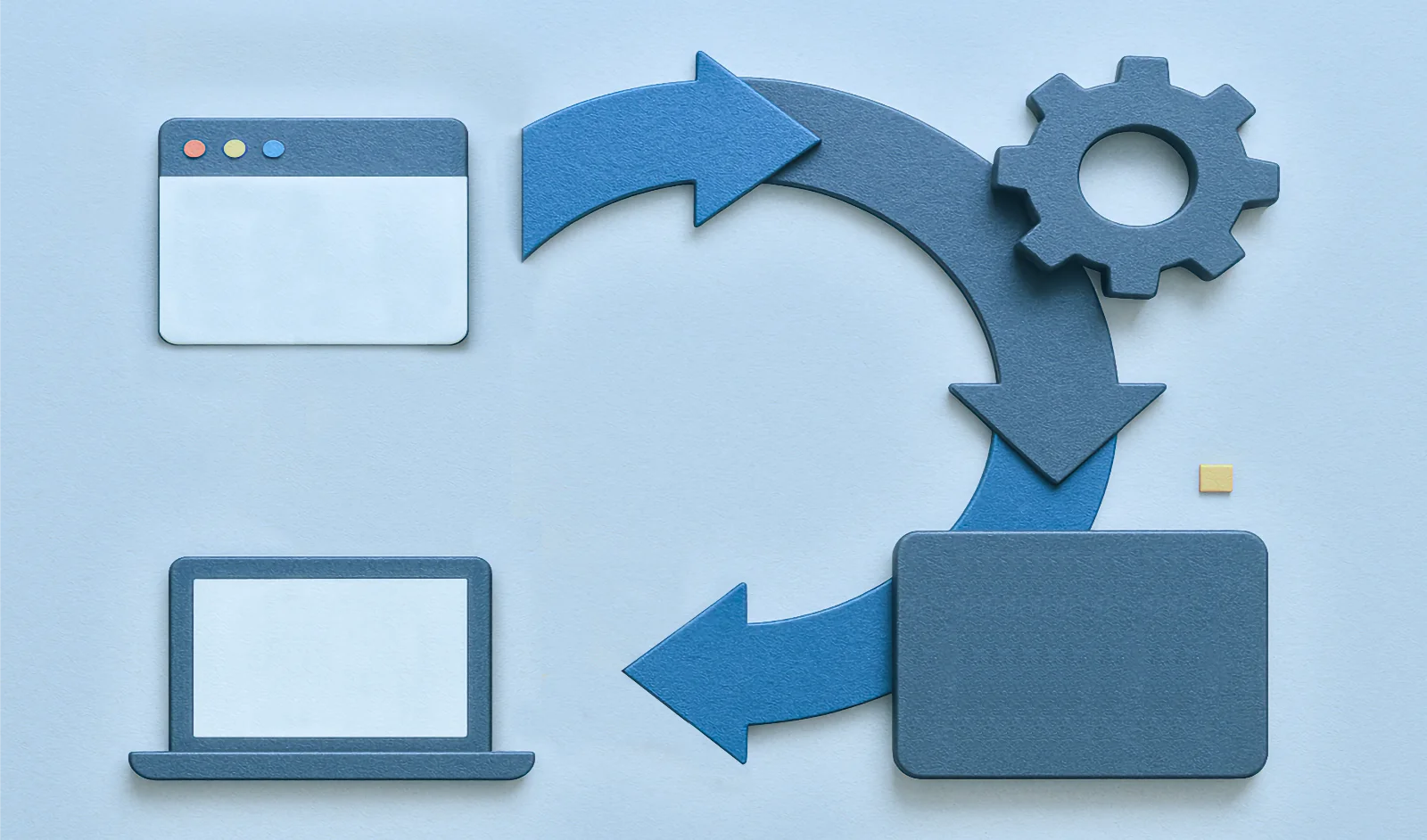

PRISM’s Audio to MIDI to Audio technology converts audio to MIDI and, using a dynamic virtual instrument, recreates the original audio on playback. MIDI notes can be edited and moved in real time, giving users extraordinary new abilities. PRISM works inside DAWs, opening traditional audio workflows to existing MIDI tools and audio plugins. It also integrates PRISM’s native, conventional GenAI (Text to Audio and Audio to Audio) directly into the workflow, along with text-to-MIDI and MIDI-to-MIDI.

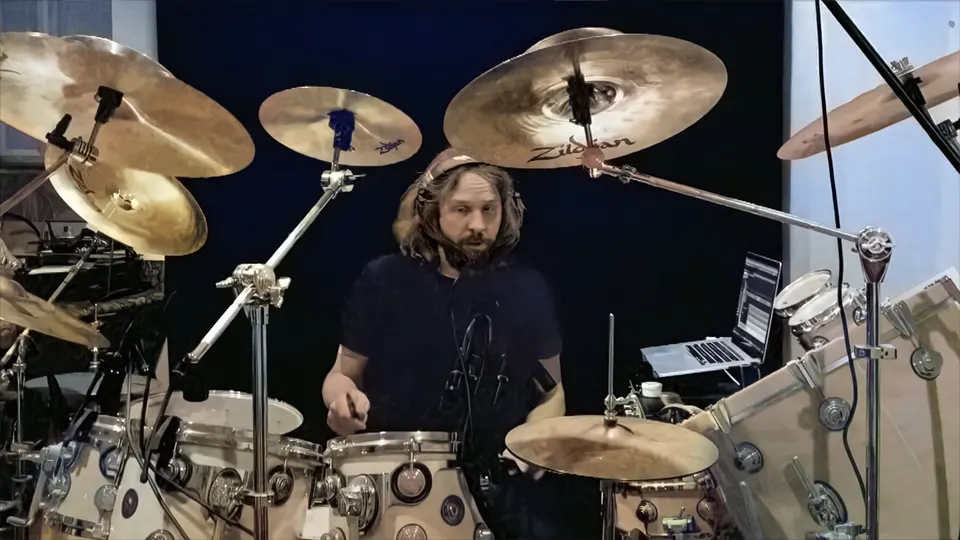

Year: 2020 Chad Wackerman & John Ferraro: Drums / Steve Morse & Blues Saraceno: Guitar / Sterling Ball: Bass

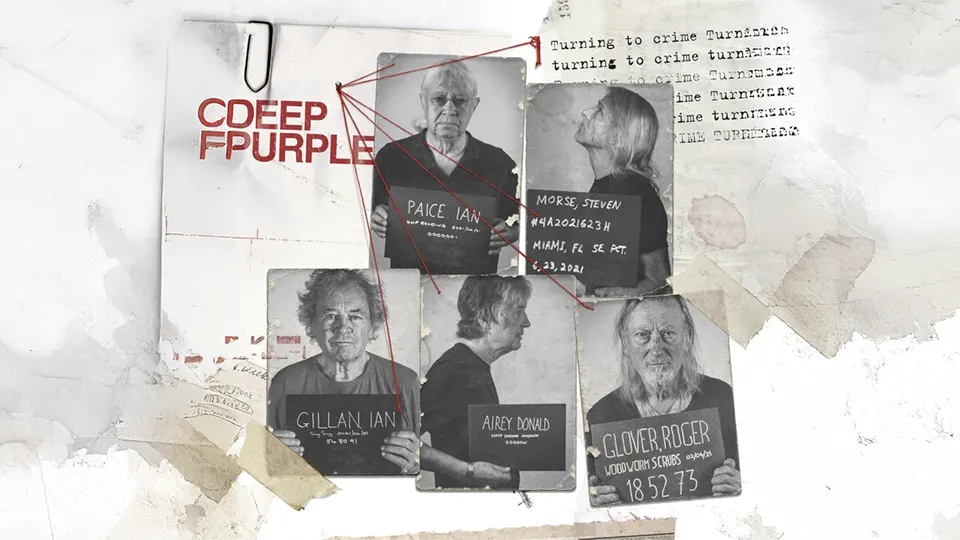

Since 2013, PRISM (and its forerunner, HPAR) has been used behind the scenes with dozens of iconic artists for whom performances are critical, from Alice Cooper and Deep Purple to Steve Vai and Crosby, Stills & Nash. In 2026, PRISM goes public.

Meet the Scientist

Bill Evans performed his graduate work at Manchester Metropolitan University and Glasgow University. His PhD thesis established the methodology of Performance Restoration, a set of technologies and techniques to repair musical errors in recorded music, and restore performers’ original intentions. The Virtual Audio Workstation was introduced in Evans’ alternate thesis. Based on this research, he has engineered tracks for dozens of iconic artists, including with his own band, Flying Colors.

Foreseen Consequences

PRISM allows acoustic audio recordings to be edited as a series of independent, temporal events. Each event is a superposition of every possible performance of that note. By analysing the surrounding events, PRISM can determine the context of any specific event well enough to identify the corresponding audio and then synthesise it.
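As a rough illustration of what "analysing the surrounding events" could mean in practice, the sketch below gathers the neighbours of one note event. It is not PRISM's implementation: the `Event` fields, the `context_window` function, and the `radius` parameter are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass


@dataclass
class Event:
    """One note event. The fields are hypothetical, chosen for illustration."""
    pitch: int      # MIDI note number
    onset: float    # start time in seconds
    velocity: int   # MIDI velocity, 0-127


def context_window(events, index, radius=2):
    """Return the events within `radius` positions of events[index],
    excluding the event itself."""
    start = max(0, index - radius)
    before = events[start:index]
    after = events[index + 1:index + 1 + radius]
    return before + after


phrase = [Event(60 + i, 0.5 * i, 90) for i in range(5)]
neighbours = context_window(phrase, 2, radius=1)
print([e.pitch for e in neighbours])  # [61, 63]
```

A real system would presumably derive far richer context than neighbouring pitches, but the windowing idea is the same: each event is interpreted in light of the events around it.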

A subset of this process can be brought to Digital Audio Workstations. An encoding process analyses the recorded audio and assigns semantic meaning and a hierarchical representation to the musical data, producing a MIDI track. It also produces modelling data that represents the range of possible sounds, based on each note’s performance context. A PRISM-enabled Virtual Instrument then renders the sequence, or any version thereof, in real time.

Foreseen Consequences

A problem manifests when raw audio is edited as MIDI. When notes are pulled apart, they expose Theoretical Audio—the parts of notes cut off by the next ones. PRISM generates the audio that would have existed, according to the performance parameters it predicts.
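To make the idea of Theoretical Audio concrete, the sketch below measures how much never-recorded audio is exposed when a note is moved away from its neighbour. It only quantifies the gap and does not generate any audio. The `Note` fields and the `exposed_gaps` function are assumptions made for this example, not part of PRISM.

```python
from dataclasses import dataclass


@dataclass
class Note:
    """Hypothetical note record for illustration."""
    pitch: int       # MIDI note number
    onset: float     # start time in seconds
    recorded: float  # seconds of this note captured before the next note cut it off


def exposed_gaps(notes):
    """For each note except the last, return the seconds between the end of
    its recorded audio and the onset of the following note."""
    ordered = sorted(notes, key=lambda n: n.onset)
    gaps = []
    for current, following in zip(ordered, ordered[1:]):
        available = following.onset - current.onset  # time until the next note starts
        gaps.append(max(0.0, available - current.recorded))
    return gaps


original = [Note(60, 0.0, 0.5), Note(62, 0.5, 0.5), Note(64, 1.0, 0.5)]
edited = [Note(60, 0.0, 0.5), Note(62, 0.75, 0.5), Note(64, 1.0, 0.5)]
print(exposed_gaps(original))  # [0.0, 0.0]
print(exposed_gaps(edited))    # [0.25, 0.0]
```

In the edited phrase, moving the second note a quarter of a second later leaves 0.25 seconds after the first note that the recording never captured. That is the region a system like PRISM would have to synthesise.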

Split Personality

PRISM divides acoustic recordings into performances and the sounds they made. Each can be edited independently using standard DAW tools (for processing MIDI and audio). Together, new classes of sound transformation are enabled to solve canonical problems with traditional music production.

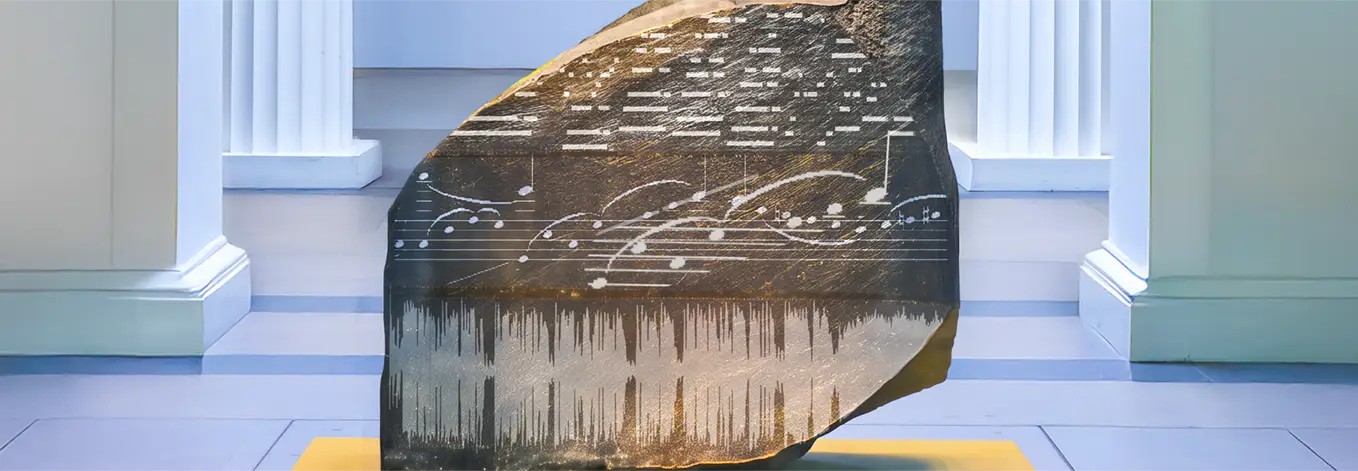

In this video, PRISM translates an audio loop to MIDI. Using features of PRISM’s Performer and Transformer system, the performance, instrumental physics, ambience, and other elements are edited separately. The DAW’s native editing capabilities are complemented by PRISM’s own unique transformations.

Third Degree Burn

PRISM’s third iteration strategically integrates AI according to a roadmap that began in 2015 and concludes in 2029. While other systems are just discovering generative sound, PRISM has been creating hits with it for over a decade.

Features that are new to the industry have been evolving strategically in PRISM, so the larger technical landscape of 2026 plugs right into PRISM where it was intended. Our training data isn’t harvested—PRISM audio can be copyrighted. And artists control the use of their likeness, receiving royalties for its use.

Meanwhile, everyone else seems to be inventing things as they go along…like that one that rhymes with, you know.

Magic. Promptly.

PRISM Version 2 added the unprecedented ability to losslessly convert audio to MIDI. Version 3 integrates traditional, prompt-based GenAI audio tasks into PRISM’s decoding process, bringing absolute control to generative audio. PRISM’s training data is ethically sourced and copyrightable, with all processing performed locally on the user’s computing device, or in the cloud.

The Encoder provides the universal entry point for audio files into PRISM.

PRISM's Odomiser composes copyrightable arrangements (e.g. string parts) based on the music in the input audio file. Users describe their arrangement with standard text prompts.

Generative audio composition in PRISM is prompt-based text-to-audio. Users can request music in the style of licensed musical artists or of their own repertoire.

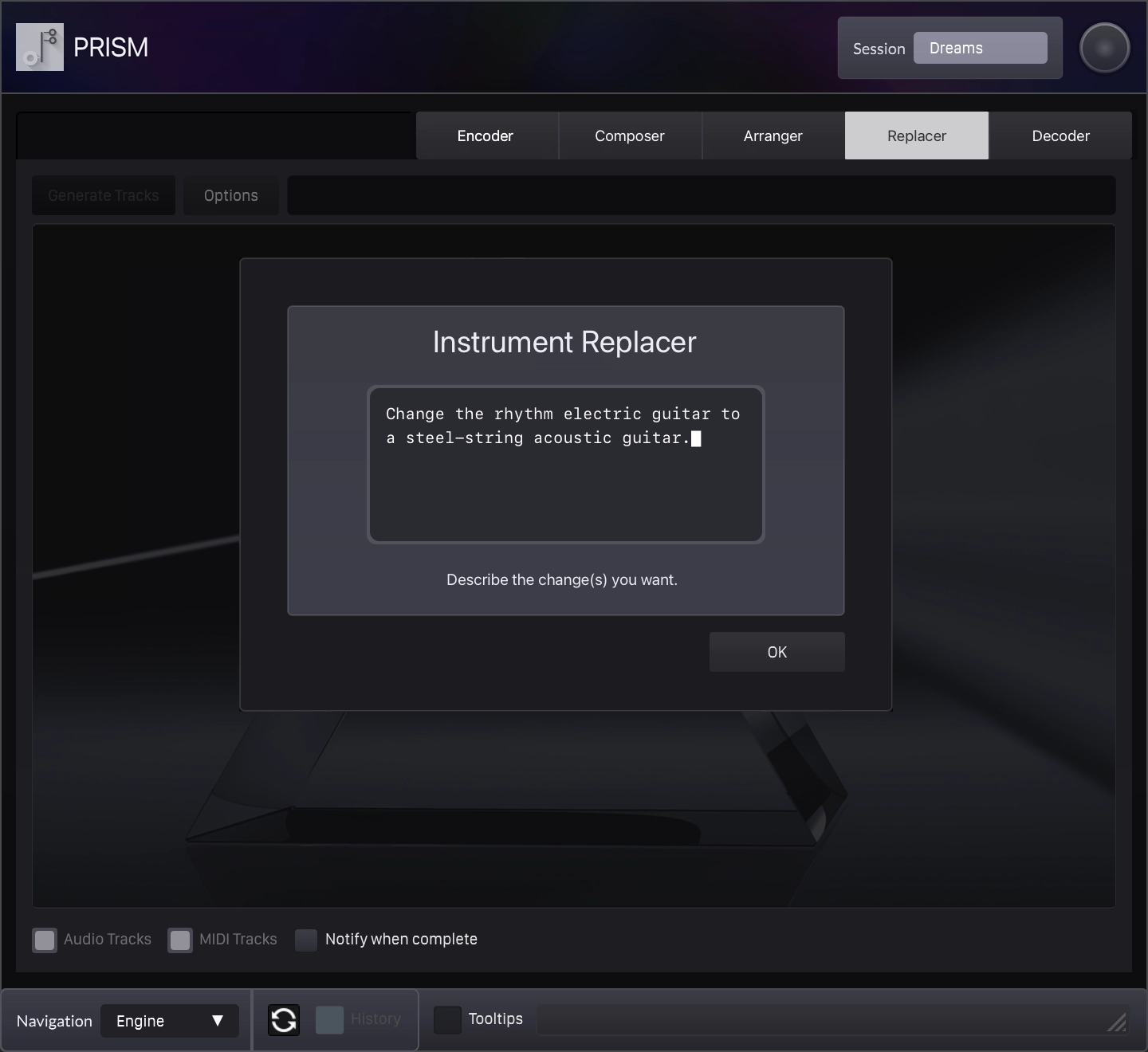

PRISM's take on Covers enables instruments to be replaced, as well as genres. As with all of PRISM's synthesis, all of the audio data is losslessly converted to MIDI, with playback through PRISM's dynamic virtual instrument.

After processing and analysing an audio file, PRISM reconstructs the performance as either rendered audio or lossless MIDI with a generative virtual instrument.

High Profiles

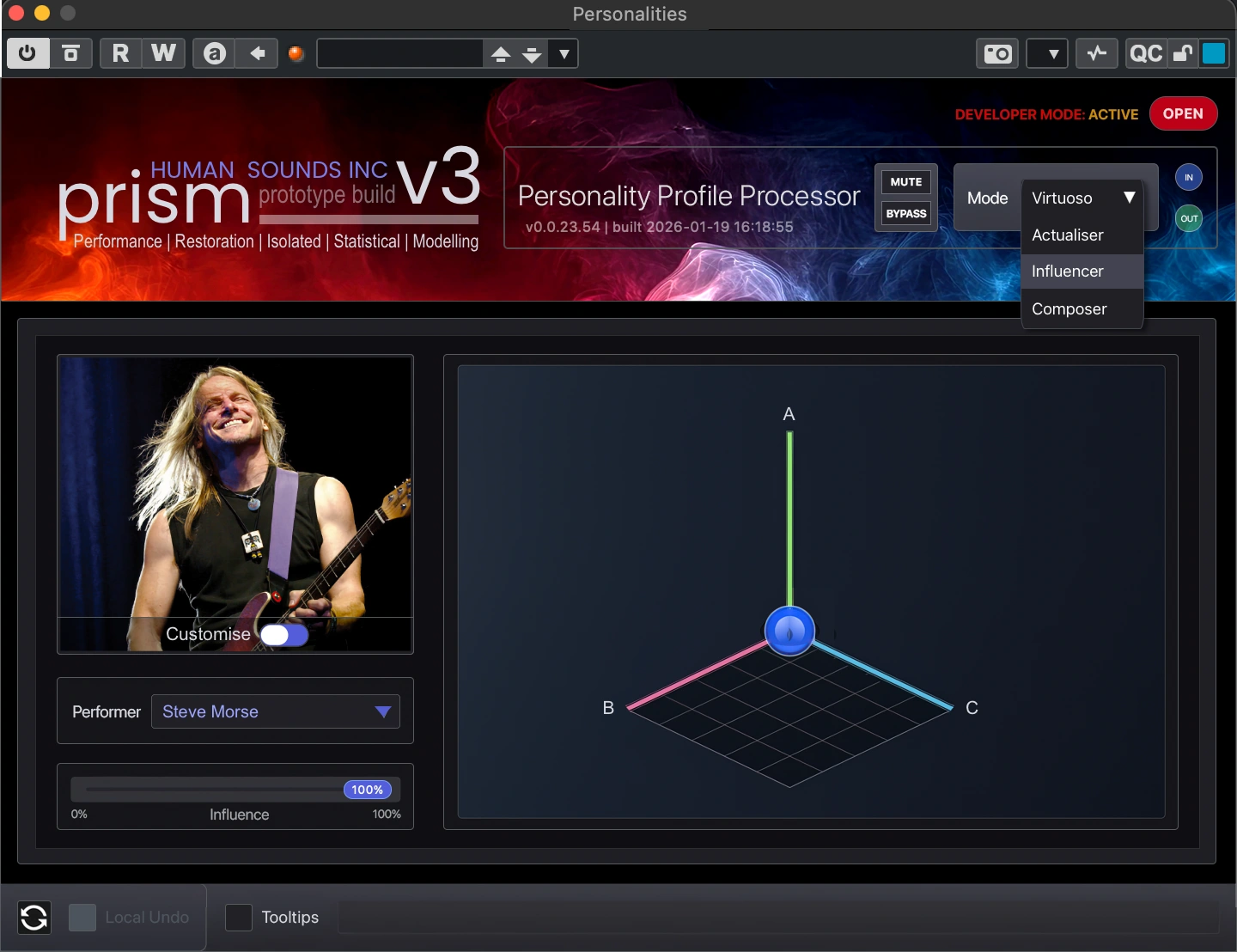

PRISM represents all performances as discrete events, described by high-level metadata that parameterises many characteristics of human performance. PRISM’s Personality Profiler combines the power of LLMs with the specificity of MIDI, with copyrightable output.

Evans invented Performance Restoration as a science in 2015: the automatic correction of musicians' performance errors. It is the opposite of quantizing; PRISM's Virtuoso module corrects errors musically, based on stylistic elements of artistic performance.
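For contrast, conventional quantizing (the approach Performance Restoration is positioned against, not PRISM's method) snaps every note onset to the nearest grid line, regardless of musical intent. A minimal sketch, with a hypothetical function name and grid size:

```python
def quantize(onsets, grid=0.25):
    """Snap each onset (in beats) to the nearest multiple of `grid`."""
    return [round(t / grid) * grid for t in onsets]


played = [0.02, 0.49, 1.1]
print(quantize(played))  # [0.0, 0.5, 1.0]
```

Every deviation from the grid is treated as an error here, including deliberate pushes and drags, which is why the result can sound mechanical.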

PRISM's Actualiser module makes performance error-correction personal, by repairing musical mistakes according to the user's own performance style. It helps fulfill the ambition of all musicians: to show the best version of themselves.

Evans pioneered stylistic imprinting in 2015 by correctly predicting a missing 7-second gap in a solo by guitar legend Steve Morse. PRISM's Influencer module lets users variably apply the musical flavor of officially licensed artists to their own performance. At 100%, the module doubles as a Featured Artist.

PRISM's Composer module provides generative composition, with the flexibility of MIDI, in the style of officially licensed musicians. Alternatively, it generates tracks in the style of the user's own repertoire.

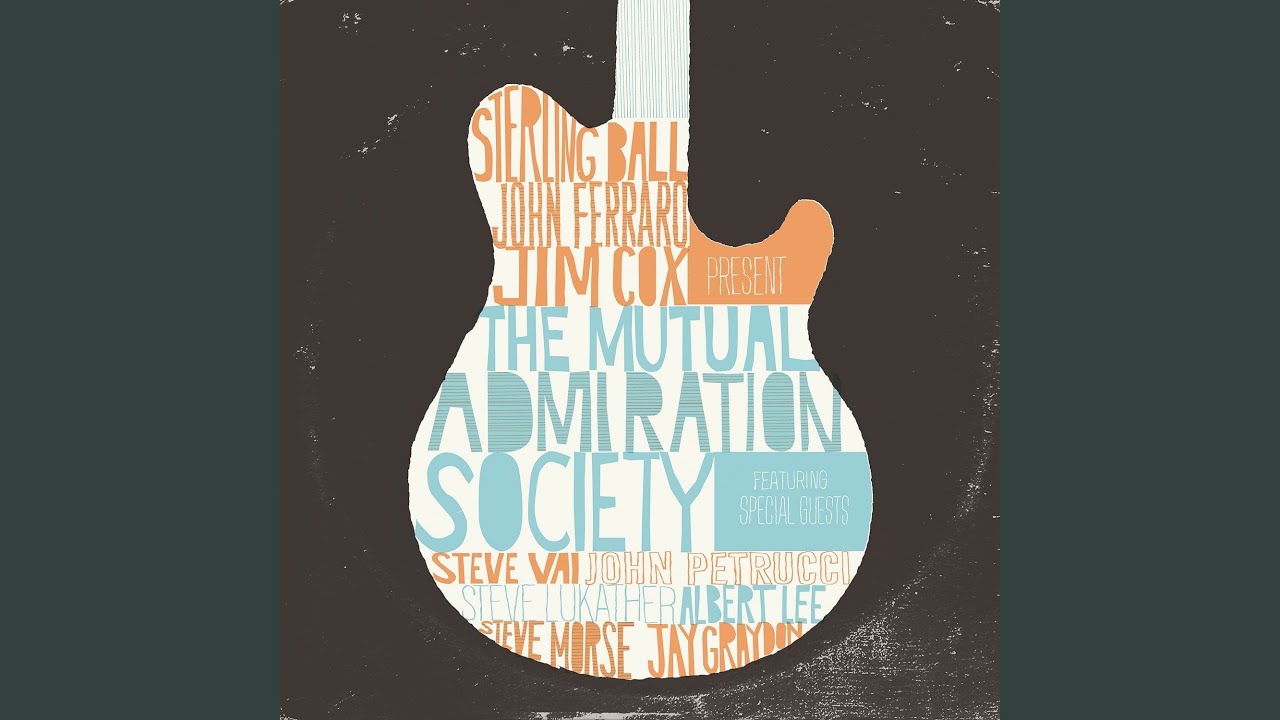

Selling Out

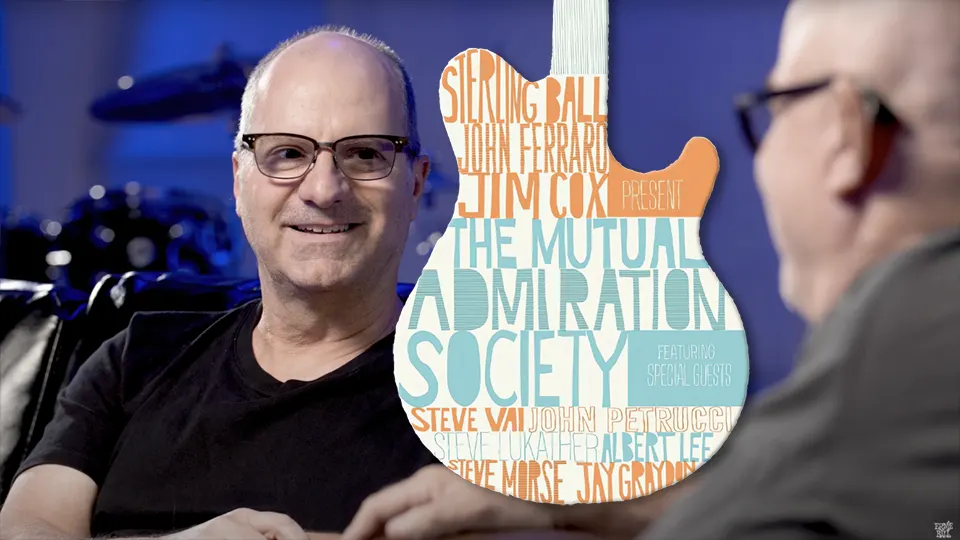

Hear PRISM on tracks with iconic artists, engineers and producers, engineered by Evans. Live songs are from the original audio—no overdubbing, re-recording or sample-layering.

Steve Vai ▪ Steve Morse ▪ Mike Portnoy ▪ Neal Morse ▪ Alice Cooper ▪ Jay Graydon ▪ David Foster ▪ Van Romaine ▪ Albert Lee ▪ Sterling Ball ▪ Joe Bonamassa ▪ Angel Vivaldi ▪ Chad Wackerman ▪ John Ferraro ▪ Marco Minnemann ▪ Jim Cox ▪ Steve Lukather ▪ Crosby, Stills & Nash ▪ Peter Frampton ▪ Peter Collins ▪ Ken Scott ▪ Bob Ezrin ▪ Michael Brauer ▪ Mark Needham ▪ Howie Weinberg ▪ John Petrucci ▪ Deep Purple

PRISM Songs/Albums

| Length | Year | PRISM Usage | Notes |
| --- | --- | --- | --- |
| 2:40 | 2021 | Steve’s guitar | |
| 8:46 | 2019 | Everything | GRAMMY Award |
| 5:07 | 2015 | All Instruments/Vocals | No Re-Recording • Chart Position Coming |
| 5:11 | 2025 | Guitar | Nov 14, 2025 Album Release |
| 6:05 | 2018 | Drums | |
| 4:35 | 2018 | Everything | #1 iTunes and Amazon Blues Charts |
| 4:35 | 2023 | Alice’s vocals | No Re-Recording • Gold Record |
| 5:10 | 2014 | All Instruments/Vocals | Top-20 Chart Position |
| 2:30 | 2018 | Everything | #1 iTunes and Amazon Blues Charts |
| 3:08 | 2018 | Everything | #1 iTunes and Amazon Blues Charts |
| 3:42 | 2018 | Everything | #1 iTunes and Amazon Blues Charts |
| 11:55 | 2012 | All Instruments/Vocals | #9 Billboard Hard Rock |
| 5:12 | 2012 | All Instruments/Vocals | #9 Billboard Hard Rock |
| 5:50 | 2019 | All Instruments/Vocals | #11 Chart Position |
| 5:10 | 2019 | All Instruments/Vocals | #11 Chart Position |
| 6:48 | 2019 | All Instruments/Vocals | #11 Chart Position |
| 3:56 | 2015 | All Instruments/Vocals | No Re-Recording • Chart Position Coming |
| 3:10 | 2025 | Steve’s guitar | |
| 6:18 | 2018 | Everything | #1 iTunes and Amazon Blues Charts |
| 2:38 | 2018 | Everything | #1 iTunes and Amazon Blues Charts |
| 4:30 | 2012 | All Instruments/Vocals | #9 Billboard Hard Rock |
| 3:33 | 2018 | Everything | #1 iTunes and Amazon Blues Charts |
| 3:51 | 2018 | Everything | #1 iTunes and Amazon Blues Charts |
| 4:02 | 2025 | Guitar | Nov 14, 2025 Album Release |
| 5:10 | 2013 | All Instruments/Vocals | No Re-Recording • #1 Chart Position |
| 6:20 | 2019 | All Instruments/Vocals | #11 Chart Position |

Stay in the Loop

You will first receive an email to confirm that you signed up. We do not use email for commercial purposes, or share it with third parties.

Stay connected with PRISM, and get an inside look at the sessions from some of music’s most compelling artists, at new technologies while they’re in the incubator, and at fresh takes on how the science of music production affects us as engineers, producers, artists and humans.