Introduction

In 2015, Dr. Evans introduced the Virtual Audio Workstation, a bold reimagining of music production based on AI and the physical manifestation of music. His submission featured a hand-built, unencumbered 3D display, including the first Volumetric Haptic Display, capable of creating haptic voxels floating in free space. While the industry catches up, PRISM brings a substantial subset of those capabilities to modern DAWs. Although PRISM looks like a commercial product, it is currently used only by Evans’ production company.

On the Record

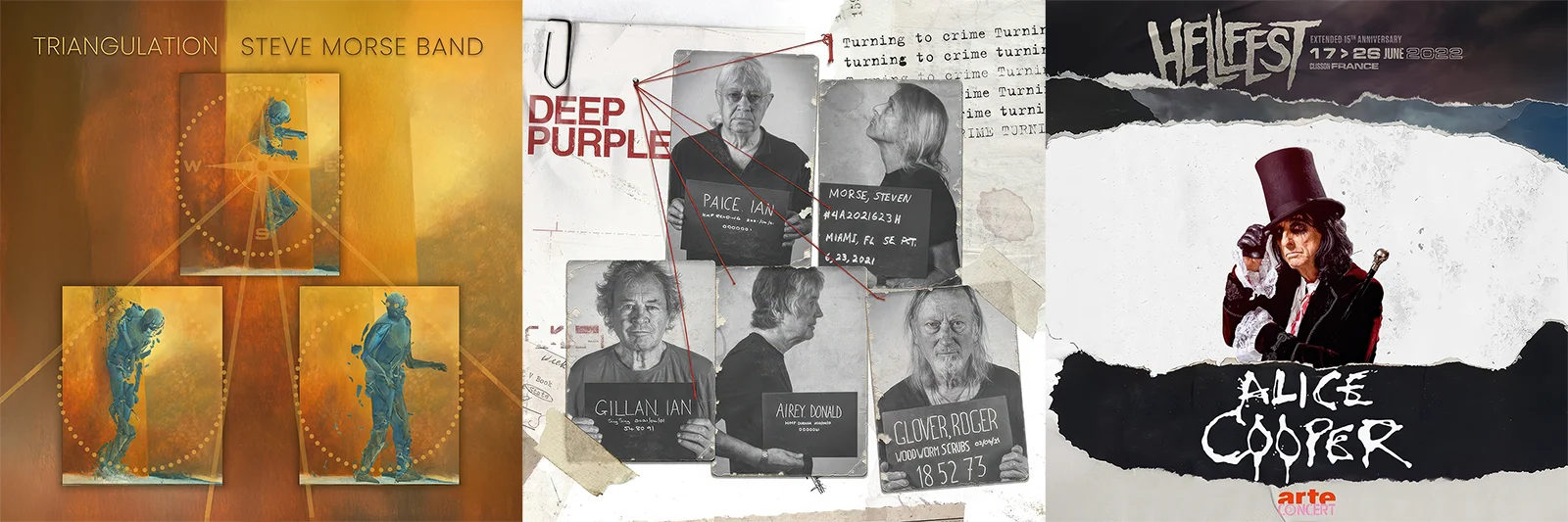

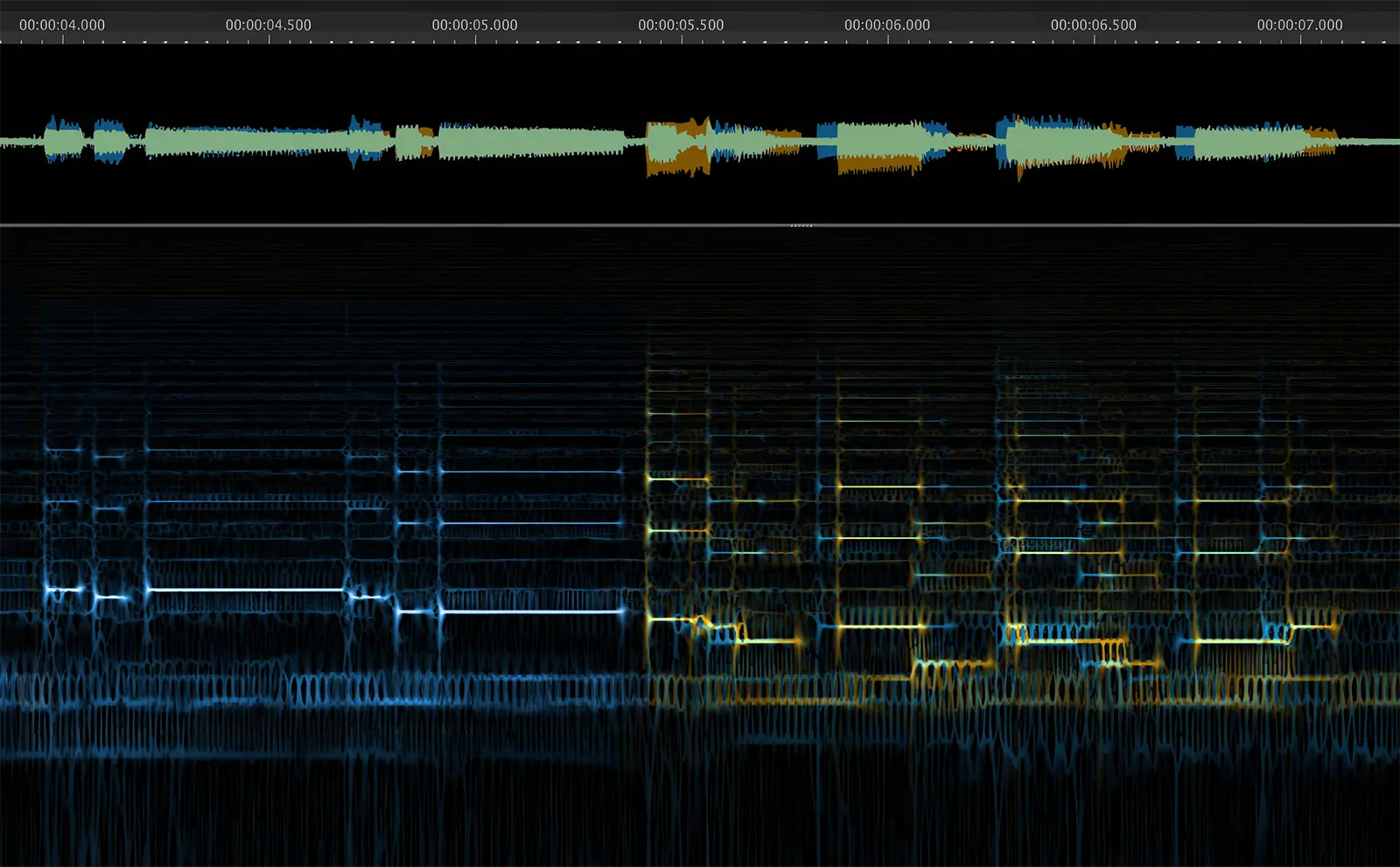

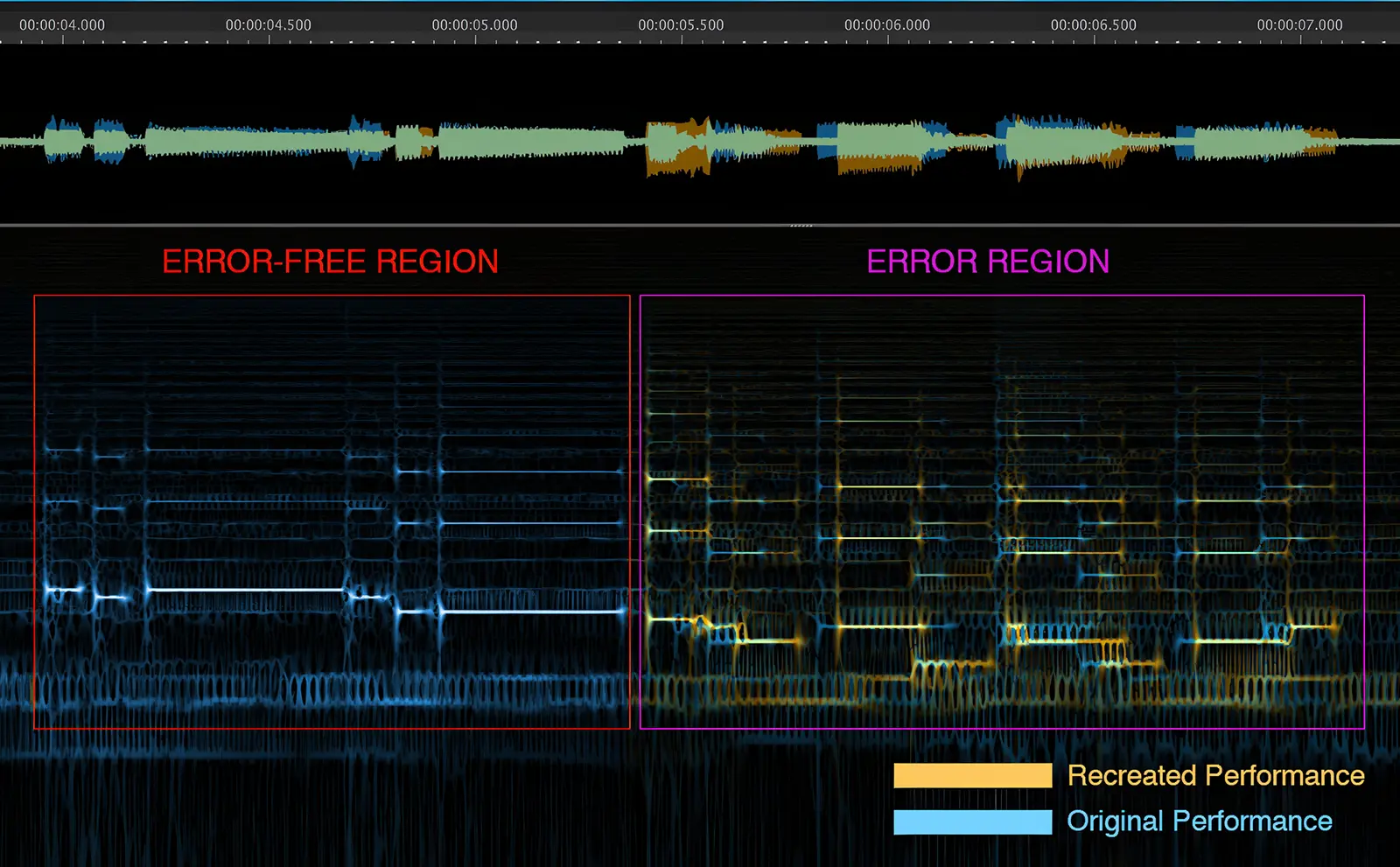

PRISM was first used commercially in 2013 on Flying Colors’ #1-charting album Live in Europe, and then in full on Live at the Z7 (2015). The original test was a 7-second dropout in a recording of Steve Morse’s guitar solo. PRISM correctly predicted what he would have played, then created the precise audio that would have been recorded. On hearing it, the seven-time GRAMMY nominee observed that he could not tell which part of his solo had been replaced. Since then, PRISM has been continuously developed and used on tracks with dozens of prominent artists.

Noteworthy Events

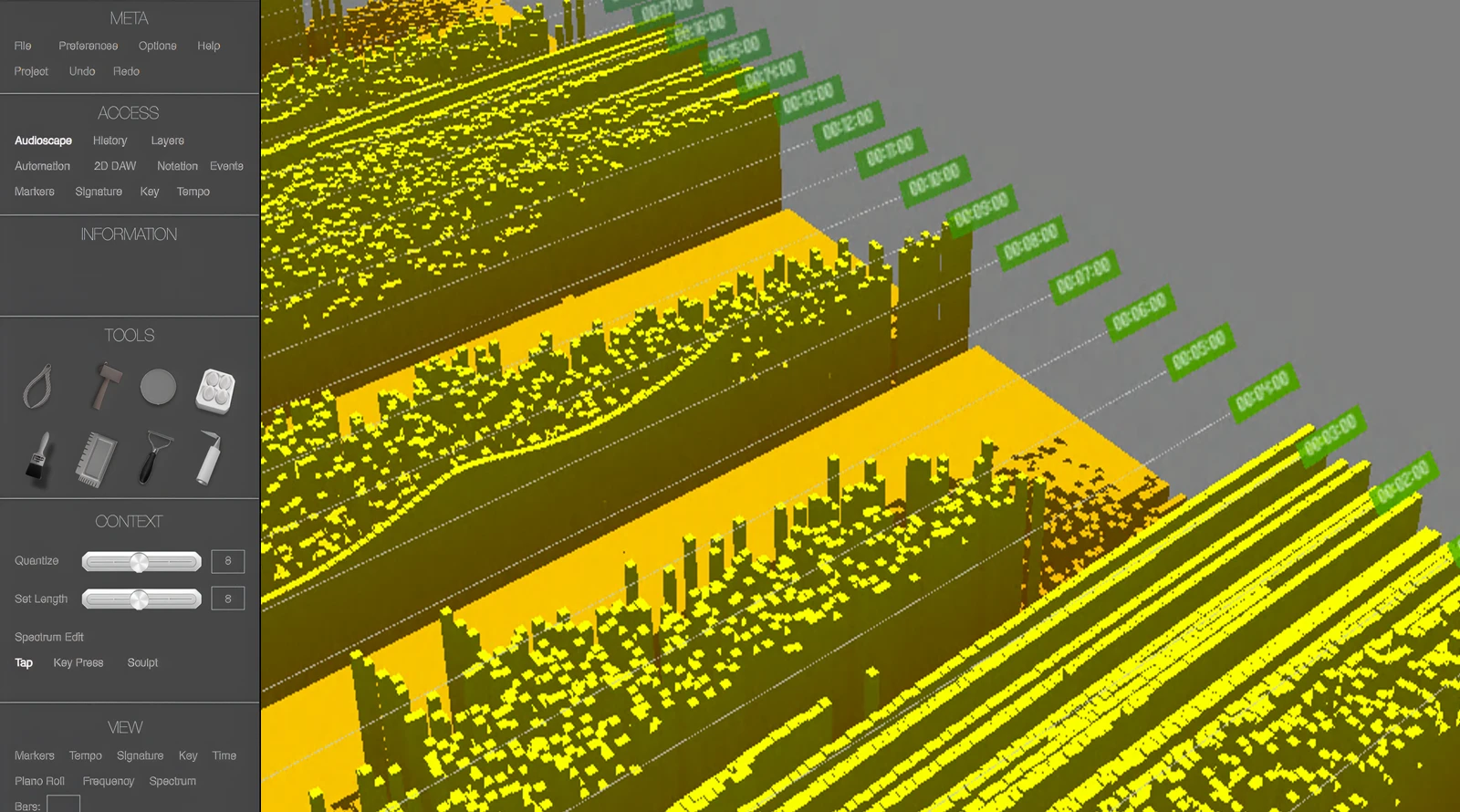

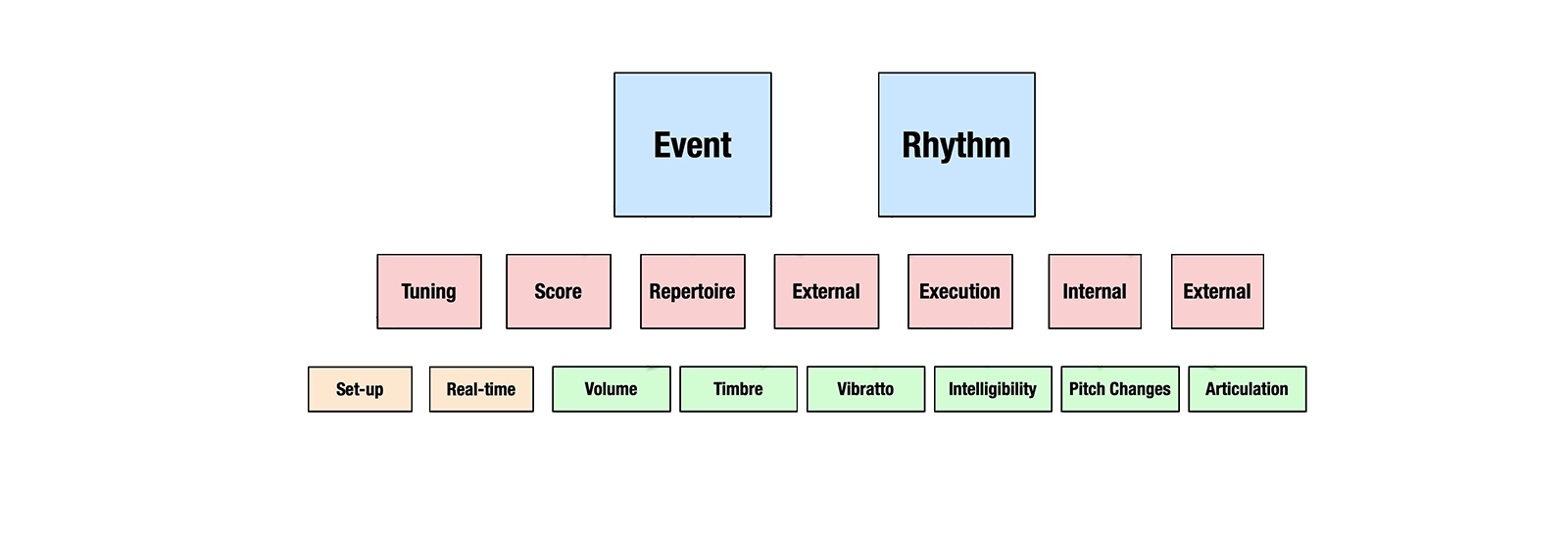

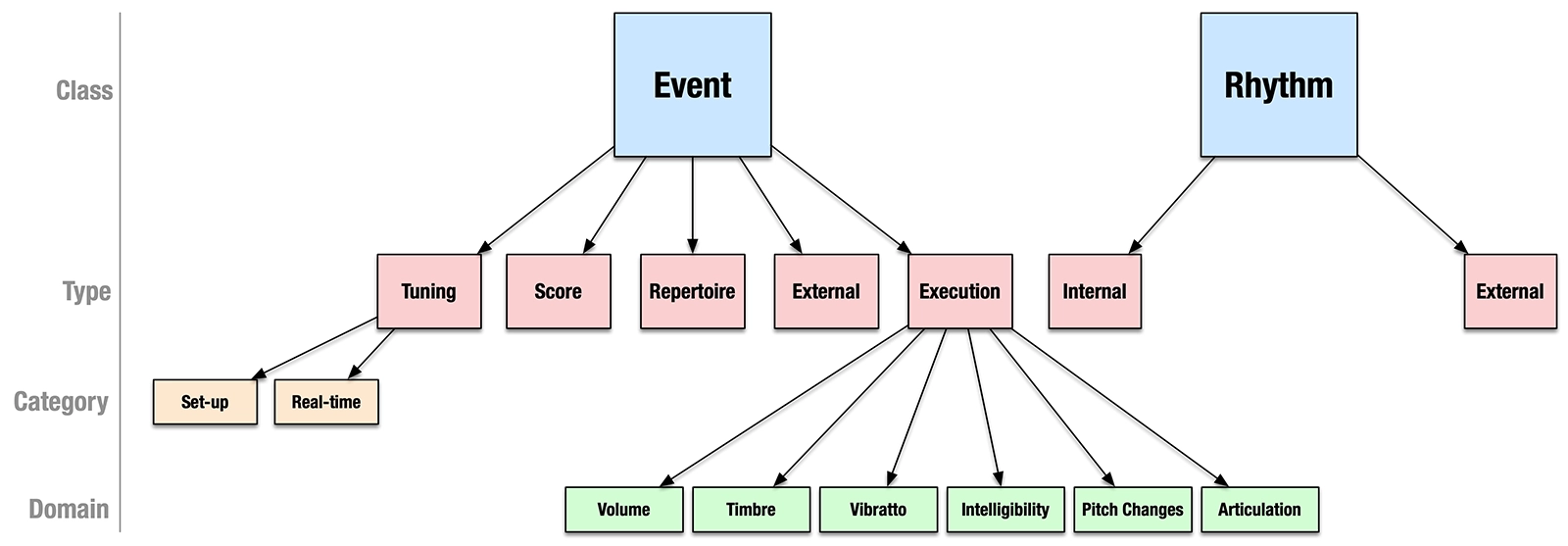

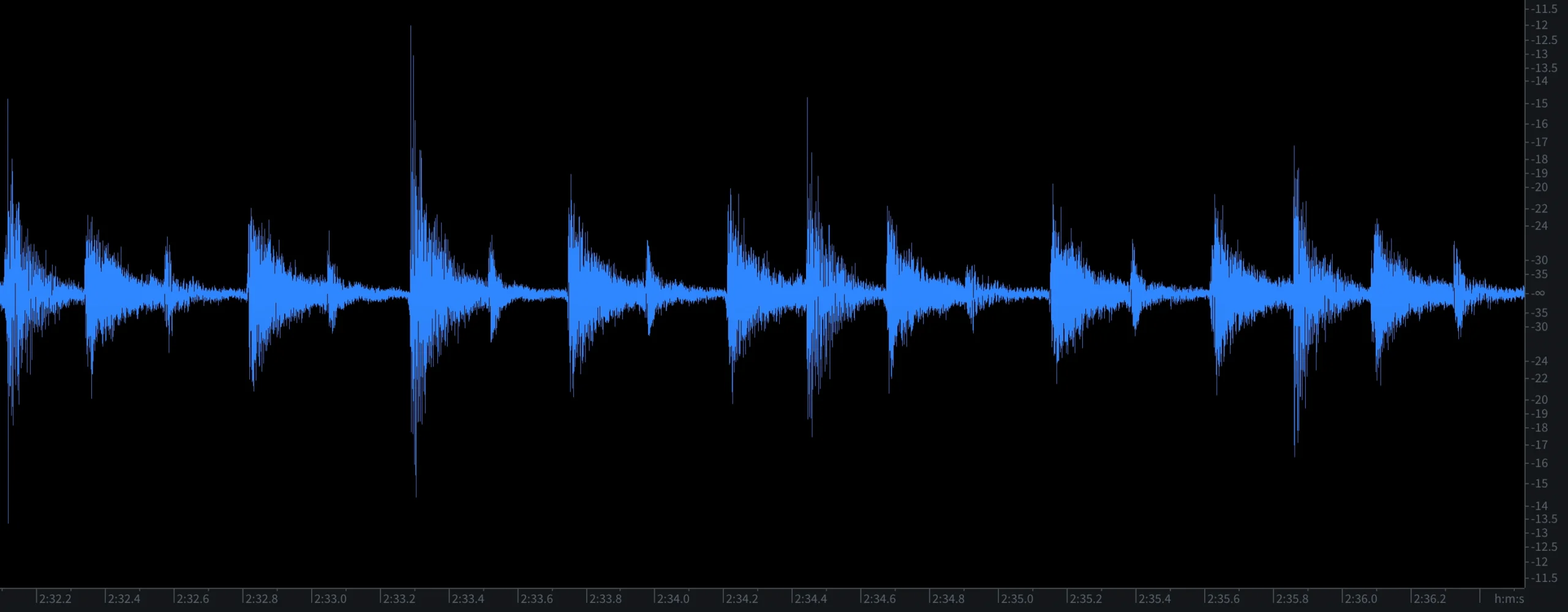

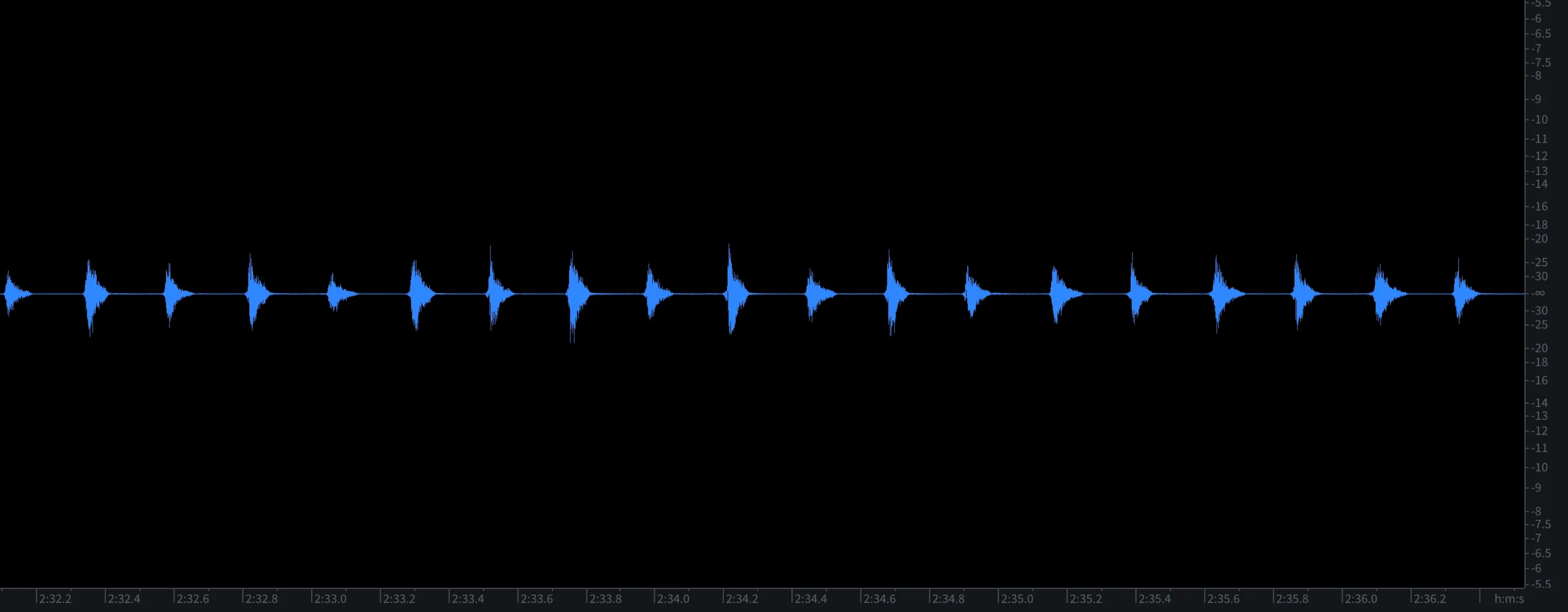

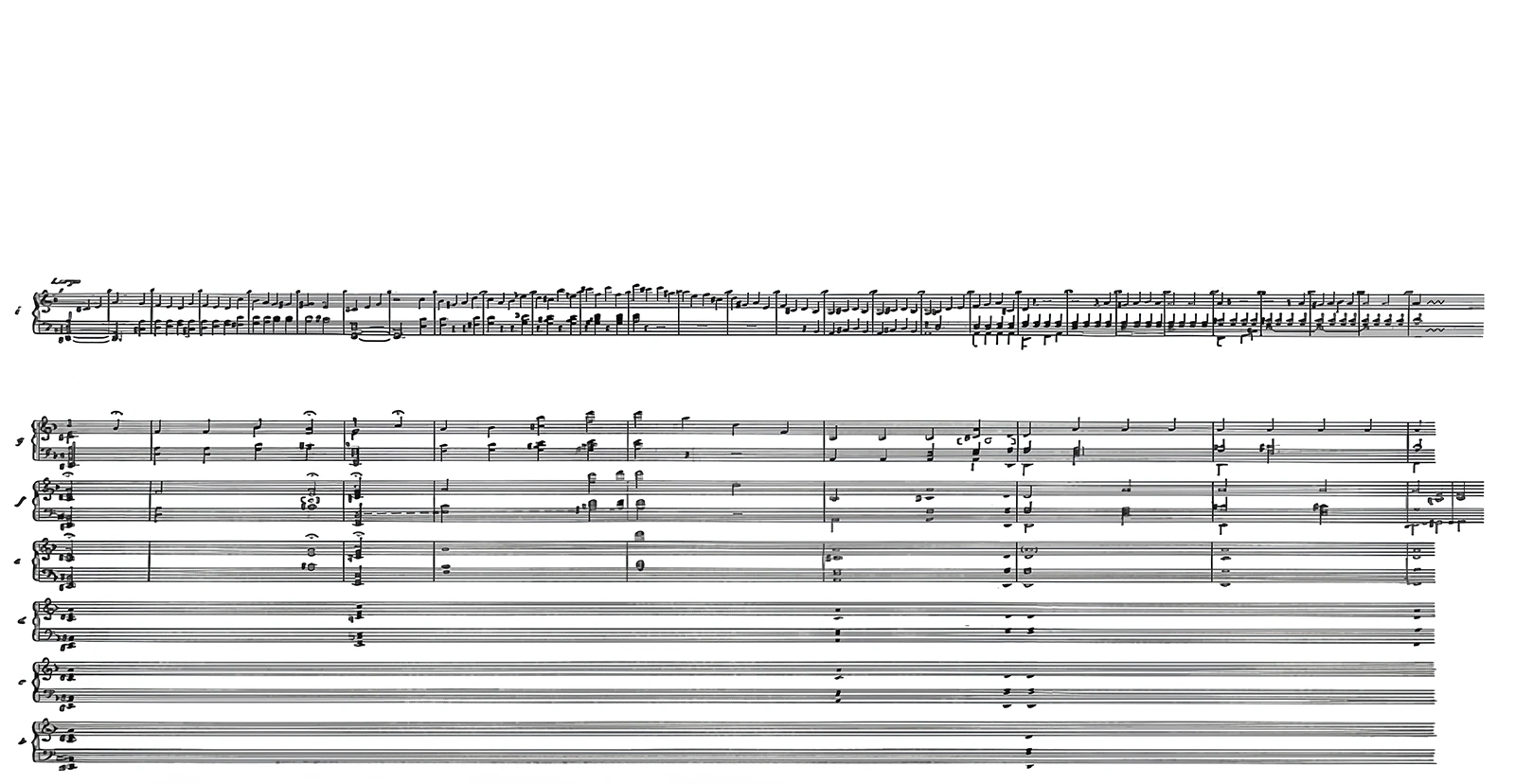

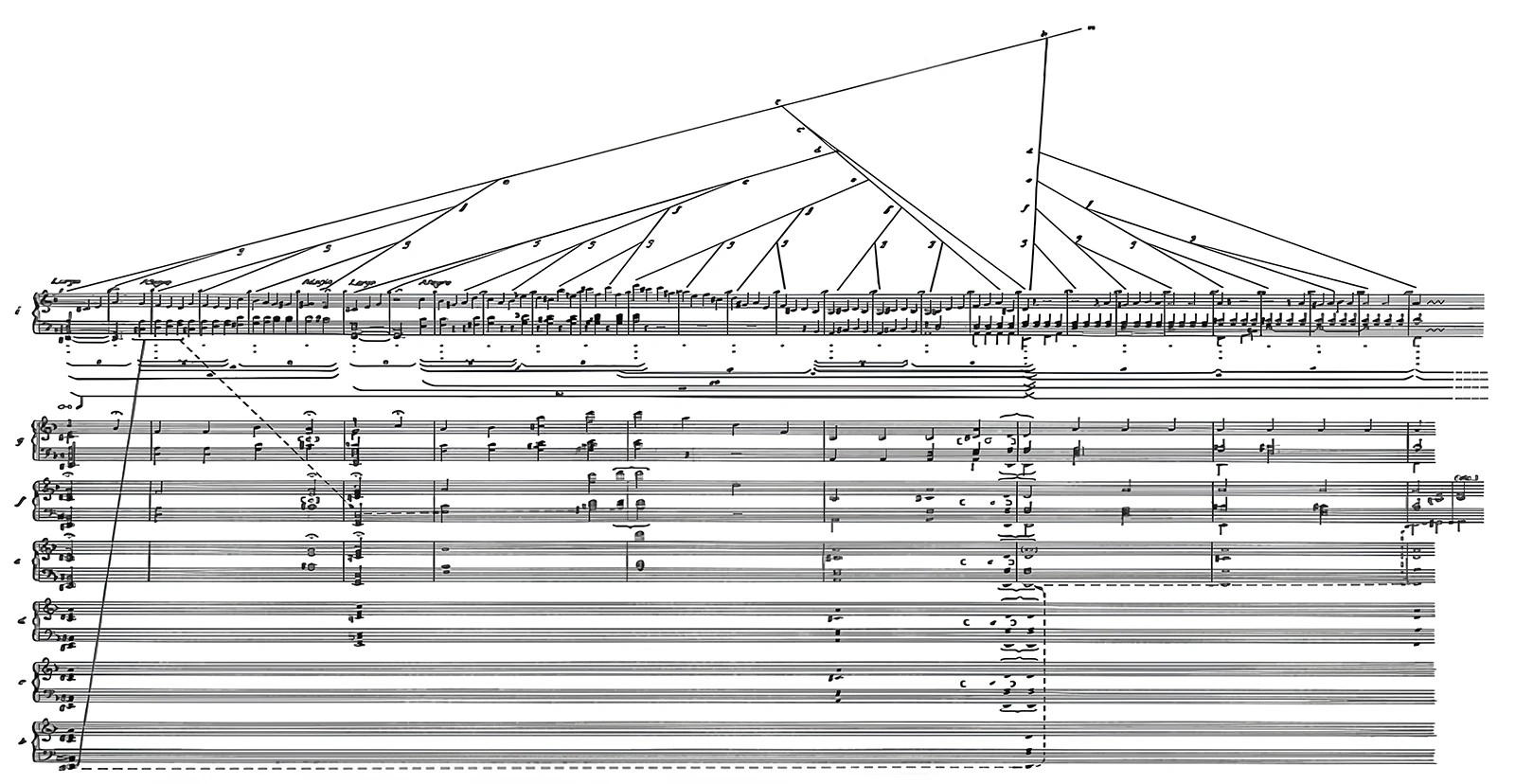

PRISM converts audio tracks to MIDI tracks, uniting audio and MIDI into rich-data MIDI tracks. This simplifies many aspects of music production, unites previously incompatible operations, and increases their power.
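At its simplest, turning pitched audio into MIDI means mapping each detected frequency to a MIDI note number. The sketch below shows only the standard equal-temperament mapping (A4 = 440 Hz = note 69) and assumes pitch detection has already happened. Keeping the residual deviation in cents alongside the note is a hypothetical illustration of what "rich data" might carry; the function names are ours, not PRISM's.

```python
import math


def hz_to_midi(freq_hz: float, a4_hz: float = 440.0) -> tuple[int, float]:
    """Map a frequency to the nearest MIDI note plus a cents offset.

    Standard MIDI numbering puts A4 at note 69, one step per semitone:
    note = 69 + 12 * log2(f / 440).
    """
    exact = 69 + 12 * math.log2(freq_hz / a4_hz)
    note = round(exact)
    cents = (exact - note) * 100.0  # hypothetical "rich data": the part plain MIDI drops
    return note, cents


def midi_to_hz(note: int, cents: float = 0.0, a4_hz: float = 440.0) -> float:
    """Inverse mapping, including the cents offset."""
    return a4_hz * 2 ** ((note + cents / 100.0 - 69) / 12)


note, cents = hz_to_midi(450.0)
print(note, round(cents, 1), round(midi_to_hz(note, cents), 3))
```

Storing the cents offset, rather than discarding it, is what lets the inverse mapping recover the original frequency instead of snapping it to the nearest semitone.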

High on Data

PRISM adds intelligent, high-level data to these MIDI tracks, imbuing them with semantic meaning. This lets users edit and mix audio by directly changing the properties they want, instead of first transforming the audio or MIDI to expose those properties and then altering them. It also makes it possible to isolate and independently edit complementary pairs of information, such as sound and performance, or source signal and ambience.

Natural Intelligence

PRISM also provides a strong, uniform and reliable foundation for deeply integrating artificial intelligence into traditional music production practice, and for new methodologies. Richer semantic meaning and a single event-driven data type provide explicit feature engineering, so the functions of artificial intelligence can be exposed more directly as user-level parameters. They also enable new classes of higher-level machine tasks, and the systematic use of mature Large Language Model data sets and algorithms.

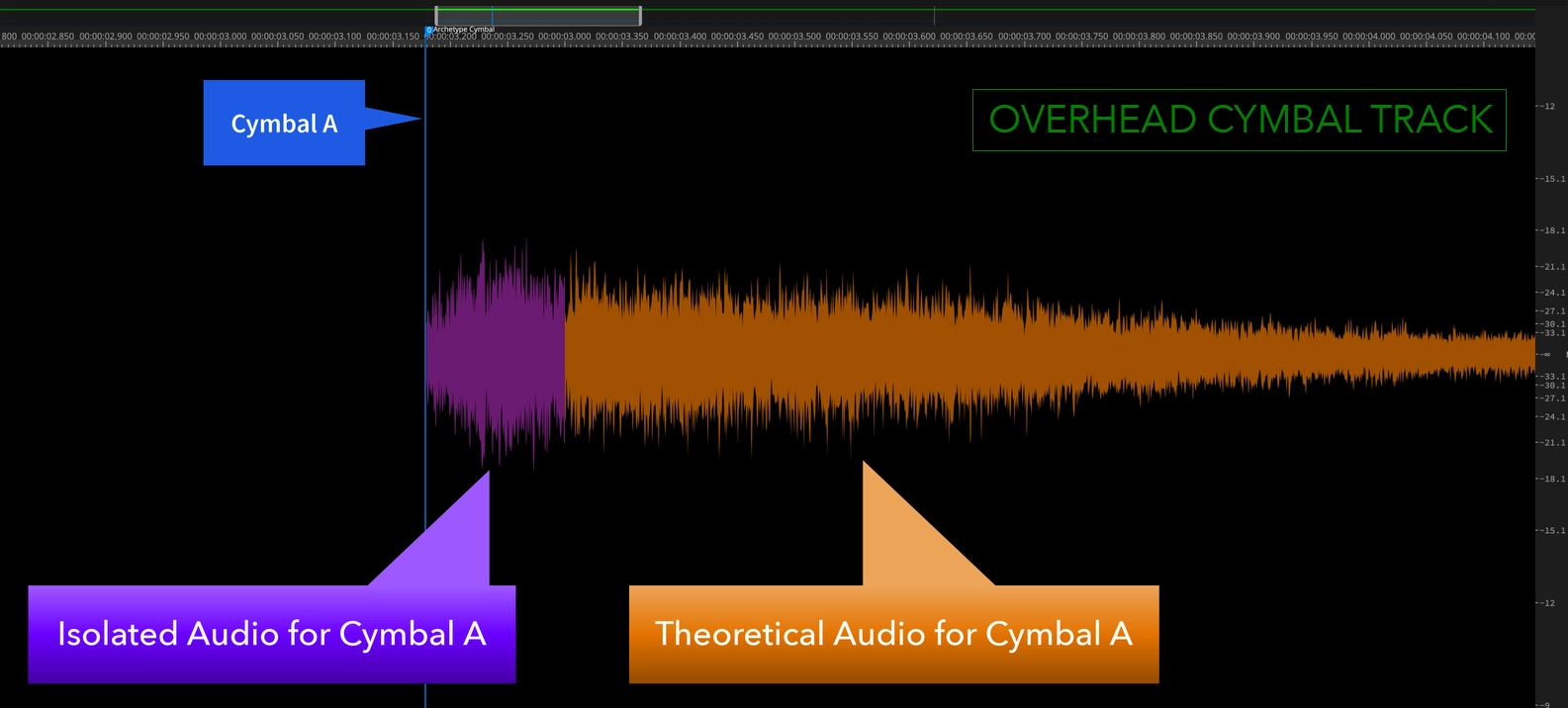

Sound Theory

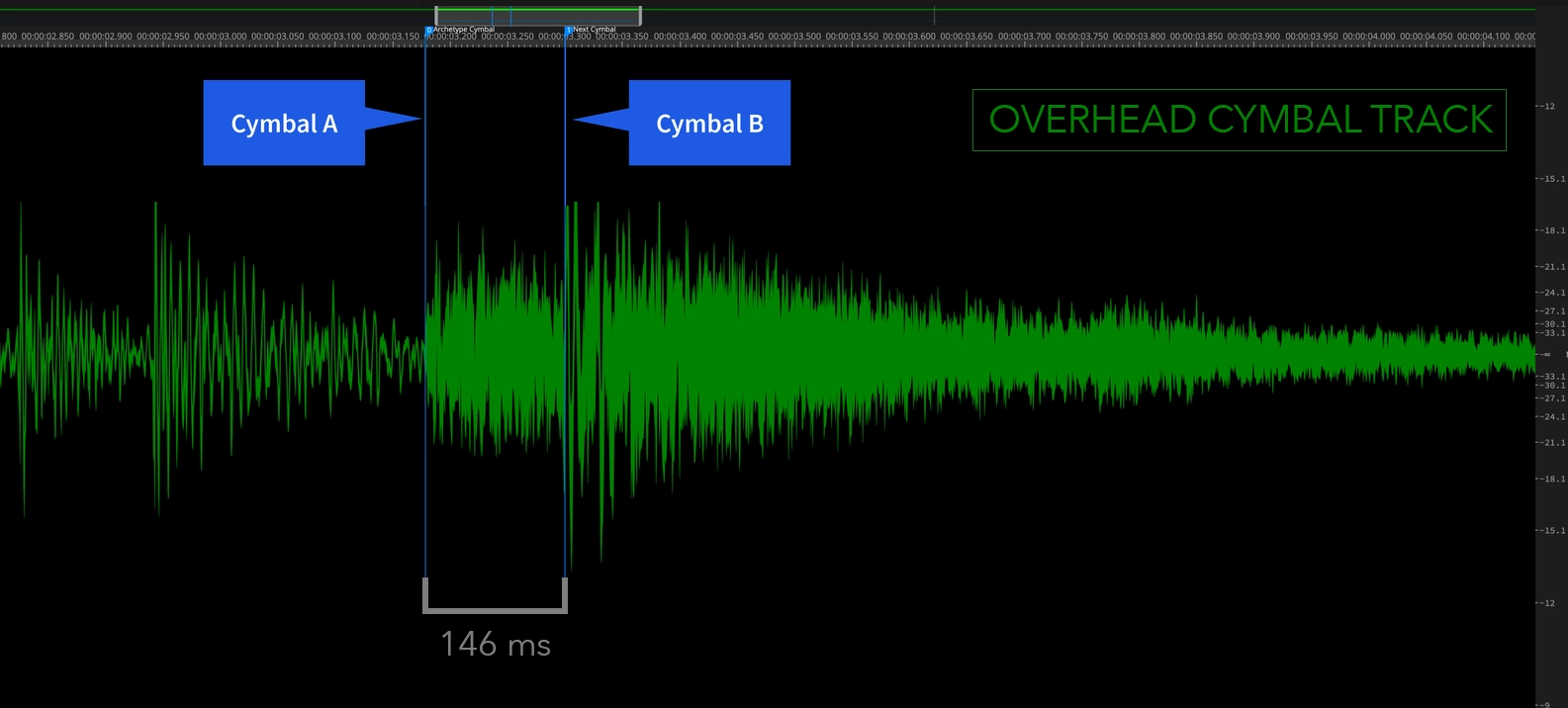

Audio-to-MIDI conversion requires synthesising sound that was never recorded. When two notes are pushed closer together, a new transition between them must be rendered correctly. When notes are pulled apart, they expose regions of silence. Generating this audio goes beyond traditional GenAI prediction: it requires authenticity, physical precision and phase coherence. The new material must also follow from the note’s performance context, that is, from the notes surrounding it. In PRISM terminology, this new sound is called Theoretical Audio. PRISM must also render exactly the same audio every time the preconditions are the same.
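Two of the constraints above, phase continuity and identical output for identical context, can be illustrated with a toy oscillator. This is not PRISM's Theoretical Audio renderer, which is not described here; it assumes a 48 kHz sample rate, a simple linear pitch glide for the transition, and a hypothetical `render_transition` function. Phase is carried over from the previous note, and the only random component is seeded from a hash of the surrounding notes.

```python
import hashlib
import math
import random

SAMPLE_RATE = 48_000  # assumed sample rate


def context_seed(context: tuple) -> int:
    """Derive a stable seed from the surrounding notes.

    Python's built-in hash() is salted per process for strings, so a
    SHA-256 digest of repr(context) is used to stay identical across runs.
    """
    digest = hashlib.sha256(repr(context).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def render_transition(f_start: float, f_end: float, n_samples: int,
                      start_phase: float, context: tuple,
                      noise_level: float = 0.01) -> tuple[list[float], float]:
    """Hypothetical renderer: glide between two pitches from start_phase.

    Phase is accumulated sample by sample, so the output joins the
    previous note without a discontinuity. Returns (samples, end_phase).
    """
    rng = random.Random(context_seed(context))
    phase = start_phase
    out = []
    for i in range(n_samples):
        t = i / max(n_samples - 1, 1)            # 0..1 across the gap
        freq = f_start + (f_end - f_start) * t   # linear frequency glide
        out.append(math.sin(phase) + noise_level * rng.uniform(-1.0, 1.0))
        phase = (phase + 2 * math.pi * freq / SAMPLE_RATE) % (2 * math.pi)
    return out, phase


ctx = (("E4", 0.0), ("G4", 0.5))
first, _ = render_transition(329.63, 392.0, 480, 0.0, ctx)
second, _ = render_transition(329.63, 392.0, 480, 0.0, ctx)
print(first == second)
```

Because the seed depends only on the context tuple, changing a neighbouring note changes the rendered detail, while re-rendering the same edit reproduces it sample for sample.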

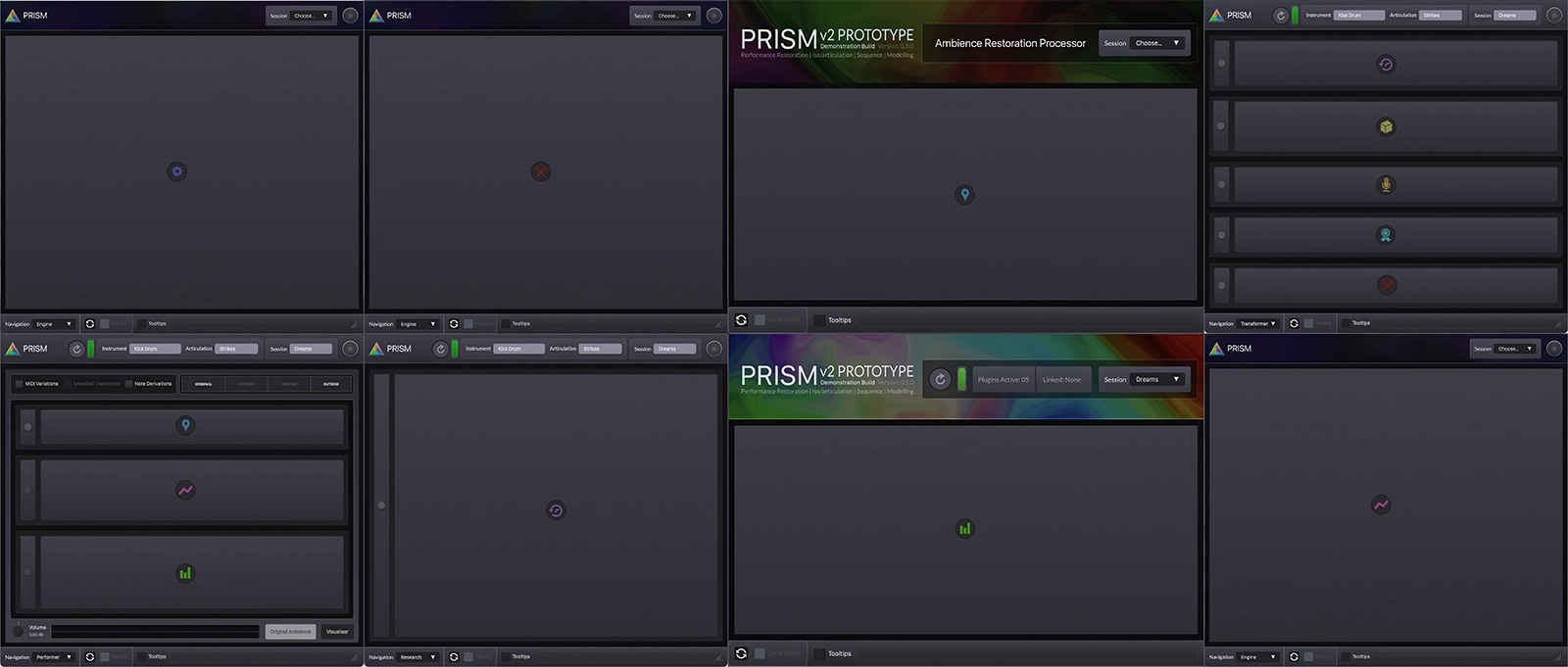

A Suite in Five Parts

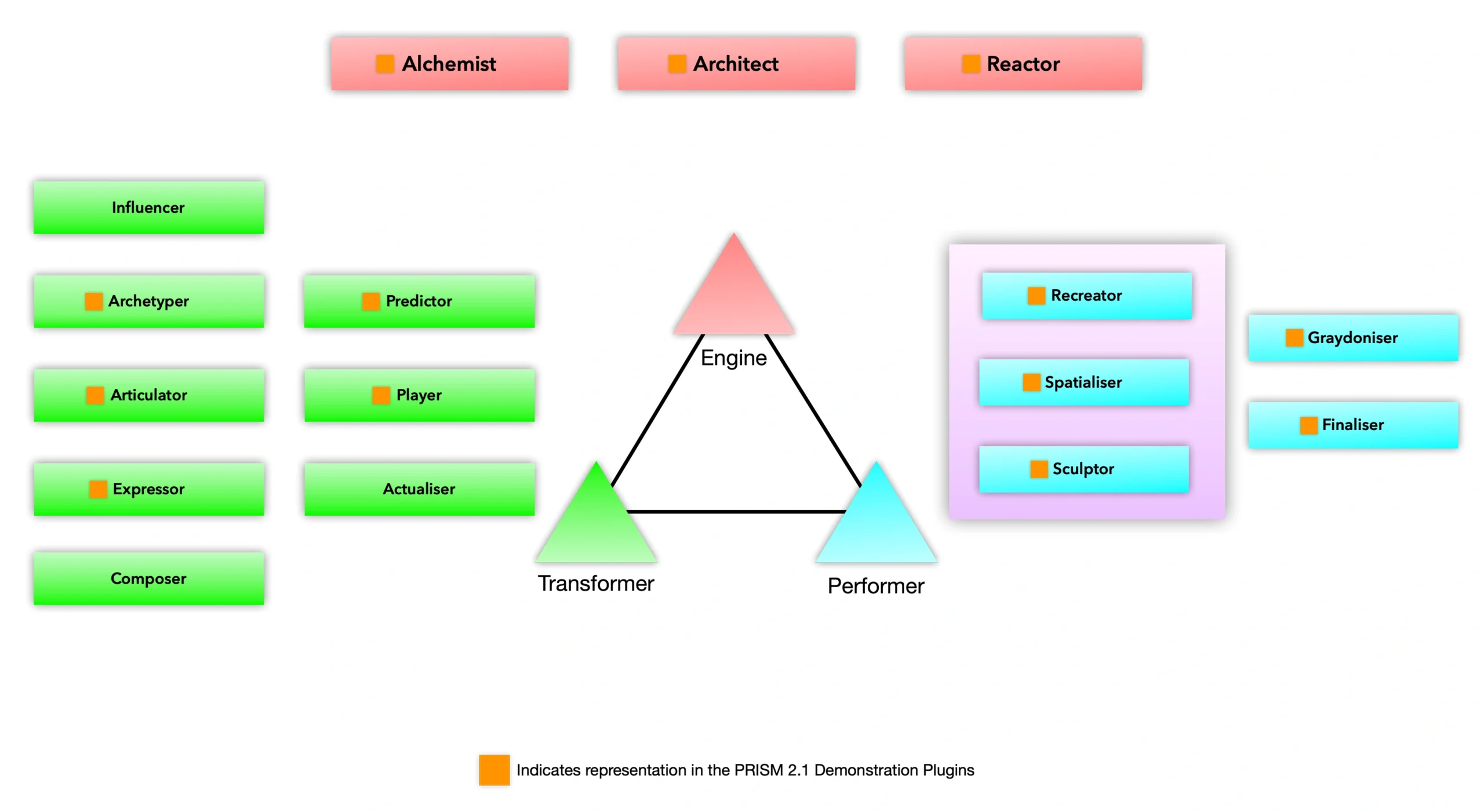

Version 2 of PRISM introduced a suite of five Instrument and Effect plugins that integrate tightly into DAWs. The suite transforms the DAW’s fundamental paradigms by recontextualising its existing functionality, while maintaining full compatibility with existing DAW practice: operations, workflows, audio plugins, mixing techniques and other production elements. The plugins also enhance many areas of traditional DAW use, solving or easing longstanding problems.

Solitary Refinement

PRISM’s Engine plugin converts source acoustic audio tracks to Archetype Audio, a WAV file modelled specifically for DAWs. Regardless of the source material, Archetype Audio is completely isolated by instrument, separated by articulation, free of noise, extended in dynamic range, enhanced with additional detail, and stripped of all ambience. Evans first deployed it on Flying Colors’ Live at the Z7; as Wikipedia notes, “Critics place it as one of the best-sounding live albums ever made.”

Super Model

PRISM models each instrument, and each note, by resynthesising the deconstructed track data. This enables real-time modification of both physical and artificial parameters, such as shell resonance and sustain time.
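PRISM's resynthesis method is not described here. As an illustration of how a physical parameter such as sustain time can become a live control, the sketch below uses modal synthesis, a standard technique that models a resonating body as a sum of exponentially decaying sinusoids. The partial frequencies, the 48 kHz sample rate, and the mapping of "sustain time" to T60 (the time to decay by 60 dB) are all assumptions.

```python
import math

SAMPLE_RATE = 48_000  # assumed sample rate


def render_modal_note(partials, t60_seconds: float, duration_seconds: float):
    """Sum exponentially decaying sinusoids (a basic modal model).

    partials: list of (frequency_hz, amplitude) pairs, e.g. hypothetical
    resonant modes of a drum shell. t60_seconds is the time for every
    partial to fall by 60 dB, i.e. by a factor of 1000 in amplitude.
    """
    decay_rate = math.log(1000.0) / t60_seconds  # amplitude decay per second
    n = int(duration_seconds * SAMPLE_RATE)
    out = []
    for i in range(n):
        t = i / SAMPLE_RATE
        env = math.exp(-decay_rate * t)
        out.append(env * sum(a * math.sin(2 * math.pi * f * t) for f, a in partials))
    return out


shell = [(180.0, 1.0), (290.0, 0.5)]                 # hypothetical shell modes
short_ring = render_modal_note(shell, 0.2, 0.5)      # tight sustain
long_ring = render_modal_note(shell, 1.0, 0.5)       # long sustain
```

Because the note is regenerated from its parameters rather than edited as a waveform, changing `t60_seconds` or the partial list simply re-renders the same strike with a different body.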

Time Travel Agent

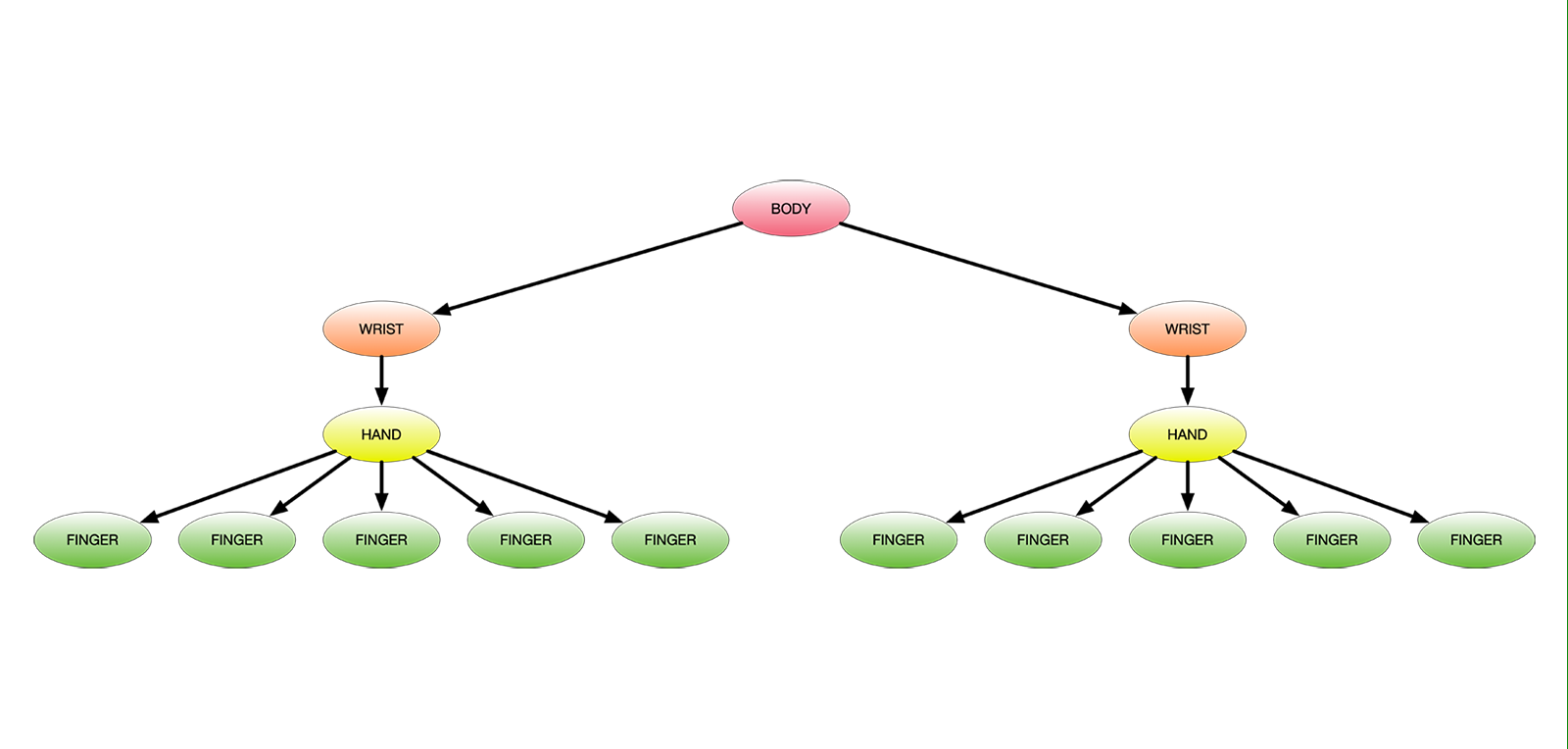

Users can also edit retroactively, as if before the recording was made. The microphones can be changed and moved, the instruments substituted or moved to a different room, the cymbal nuts tightened. Likewise, the performance itself can be altered, either as an improved version of the original or in the style of a specific musician. Note timing is based on each note’s perceptual onset, instead of MIDI’s traditional (and late) reference to note energy.
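The difference between a perceptual onset and an energy-based timing reference can be shown on a single amplitude envelope. Real perceptual-onset models are more involved; the sketch below uses a simple threshold crossing (an assumed 10% of peak) as a stand-in and compares it with the peak-energy sample, which always arrives later on a note with a non-zero attack. The function name is hypothetical.

```python
def onset_and_peak(envelope, threshold_ratio=0.1):
    """Return (onset_index, peak_index) for a non-empty amplitude envelope.

    onset_index: first sample reaching threshold_ratio of the maximum,
    a crude stand-in for the perceptual onset.
    peak_index: sample of maximum energy, an energy-based reference.
    """
    peak_value = max(envelope)
    peak_index = envelope.index(peak_value)
    threshold = threshold_ratio * peak_value
    onset_index = next(i for i, v in enumerate(envelope) if v >= threshold)
    return onset_index, peak_index


attack = [0.0, 0.02, 0.05, 0.2, 0.5, 0.9, 1.0, 0.8, 0.6]
onset, peak = onset_and_peak(attack)
print(onset, peak)
```

With a real envelope sampled at, say, 48 kHz, the lag between the two references in milliseconds is `(peak - onset) / 48`, which is the kind of systematic lateness the paragraph above attributes to energy-based timing.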

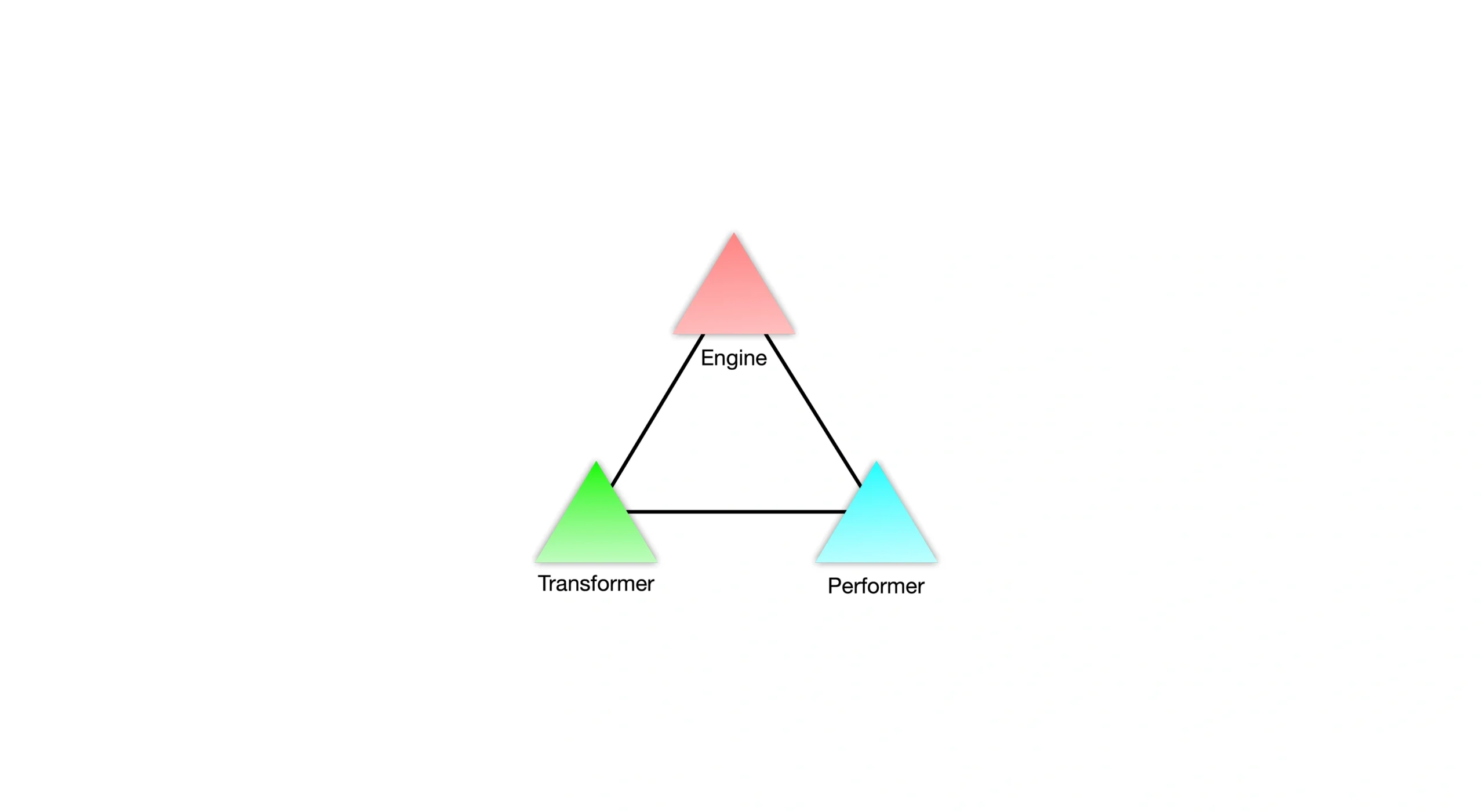

Architecture

PRISM’s deep integration requires no changes to the DAW. It keeps an internal version of its advanced data structures for its own use, and serves a translated subset to the DAW at the granularity the host supports. As a result, PRISM-enabled projects are saved in the DAW’s native project format and support interchange formats such as AAF. PRISM’s native data is saved in a separate file that accompanies the project.
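PRISM's internal data structures are not published. The sketch below illustrates only the general pattern described above: a richer internal note record, and a translation step that quantises it to the host's resolution (ticks per quarter note) and reduces it to plain note-on/note-off events. `RichNote`, its fields, and `to_host_events` are hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field


@dataclass
class RichNote:
    """Hypothetical internal note record; fields are illustrative only."""
    onset_seconds: float
    duration_seconds: float
    pitch: float                 # fractional MIDI pitch, e.g. 64.3
    loudness: float              # 0.0 .. 1.0
    articulation: str = "normal"
    extra: dict = field(default_factory=dict)  # semantic data the host never sees


def to_host_events(notes, bpm: float, ppq: int):
    """Translate RichNotes into (tick, status, key, velocity) tuples.

    Times are quantised to the host's ticks per quarter note (ppq), pitch
    is rounded and clamped to 0..127, and loudness maps to velocity 1..127.
    Articulation and extra data are dropped from the host view.
    """
    ticks_per_second = ppq * bpm / 60.0
    events = []
    for n in notes:
        key = min(127, max(0, round(n.pitch)))
        velocity = min(127, max(1, round(n.loudness * 127)))
        on_tick = round(n.onset_seconds * ticks_per_second)
        off_tick = round((n.onset_seconds + n.duration_seconds) * ticks_per_second)
        events.append((on_tick, 0x90, key, velocity))  # note on, channel 1
        events.append((off_tick, 0x80, key, 0))        # note off, channel 1
    # At equal ticks, note-offs (0x80) sort before note-ons (0x90).
    events.sort(key=lambda e: (e[0], e[1]))
    return events


phrase = [RichNote(0.0, 0.5, 64.3, 0.8), RichNote(0.5, 0.5, 67.0, 0.6)]
print(to_host_events(phrase, bpm=120, ppq=480))
```

In this pattern the host only ever sees ordinary MIDI, which is why the project can stay in the DAW's native format; the fields that do not survive translation are what would live in the accompanying file.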

Second Language

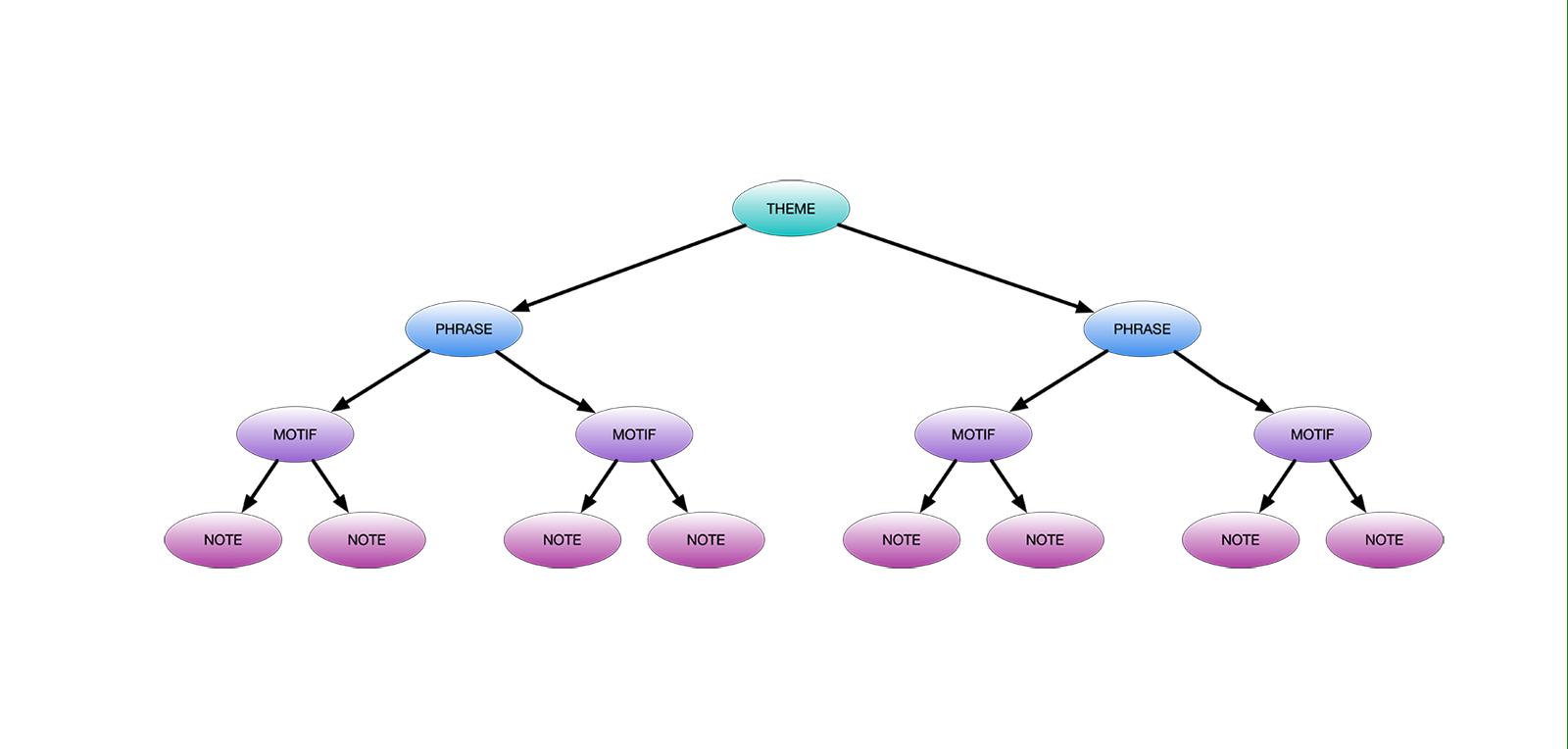

PRISM 3 leverages LLM behaviour by converting audio files to semantically encoded, lossless MIDI. In that form, a second analysis reveals hierarchical musical structure, treating music as a language (for example, as a context-free grammar).
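PRISM's second analysis is not specified. To make the idea of music as a context-free grammar concrete, the sketch below applies Re-Pair, a known grammar-compression algorithm, to a sequence of note tokens: it repeatedly replaces the most frequent adjacent pair with a new rule, so repeated motifs become nested nonterminals. This is a simplified, quadratic-time version chosen for readability, and the token format is an assumption.

```python
from collections import Counter


def repair_grammar(tokens):
    """Infer a straight-line context-free grammar with the Re-Pair method.

    Replaces the most frequent adjacent pair with a new nonterminal until
    no pair occurs twice. Returns (top_level_sequence, rules), where rules
    maps each nonterminal to its (left, right) pair. Assumes terminal
    tokens never look like "R<number>".
    """
    seq = list(tokens)
    rules = {}
    next_id = 0
    while True:
        pairs = Counter(zip(seq, seq[1:]))
        if not pairs:
            break
        pair, count = pairs.most_common(1)[0]
        if count < 2:
            break
        name = f"R{next_id}"
        next_id += 1
        rules[name] = pair
        # Left-to-right, non-overlapping replacement of the chosen pair.
        out, i = [], 0
        while i < len(seq):
            if i + 1 < len(seq) and (seq[i], seq[i + 1]) == pair:
                out.append(name)
                i += 2
            else:
                out.append(seq[i])
                i += 1
        seq = out
    return seq, rules


def expand(symbol, rules):
    """Expand a symbol back to its terminal tokens."""
    if symbol not in rules:
        return [symbol]
    left, right = rules[symbol]
    return expand(left, rules) + expand(right, rules)


motif = ["C4", "E4", "G4", "C5"]
top, grammar = repair_grammar(motif * 4)
print(top, grammar)
```

For a motif played four times, the motif becomes a rule of its own, and the top-level sequence reduces to two copies of a rule for the motif played twice: a small hierarchy recovered purely from repetition.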

Come Correct

Hierarchical structure enables a generalised understanding of our artistic intentions, and lets PRISM take that next step with us. PRISM 3’s Performance Restoration repairs musical mistakes not against an objective measure, but within the context of the user’s own repertoire.
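How Performance Restoration works is not described here. As a much simpler illustration of judging a note against the user's own repertoire rather than a fixed rule, the sketch below learns which pitch-to-pitch transitions the user actually plays, then replaces any note whose transition never appears with the nearby pitch the repertoire favours. The first-order transition model, the two-semitone search radius, and the function names are all assumptions, not PRISM's method.

```python
from collections import Counter


def learn_transitions(repertoire):
    """Count pitch-to-pitch transitions (MIDI numbers) across the user's pieces."""
    counts = Counter()
    for piece in repertoire:
        counts.update(zip(piece, piece[1:]))
    return counts


def restore(performance, counts, search_radius=2):
    """Replace notes whose transition never occurs in the repertoire.

    For each suspect note, try pitches within search_radius semitones and
    keep the one the repertoire uses most often after the previous note;
    ties go to the smallest pitch change. Notes with no supported
    alternative are left untouched.
    """
    fixed = list(performance)
    for i in range(1, len(fixed)):
        prev, cur = fixed[i - 1], fixed[i]
        if counts[(prev, cur)] > 0:
            continue
        candidates = [cur + d for d in range(-search_radius, search_radius + 1)]
        best = max(candidates, key=lambda p: (counts[(prev, p)], -abs(p - cur)))
        if counts[(prev, best)] > 0:
            fixed[i] = best
    return fixed


my_pieces = [[60, 62, 64, 65, 67], [60, 62, 64, 62, 60]]
model = learn_transitions(my_pieces)
print(restore([60, 62, 63, 65, 67], model))
```

The same wrong note could be "correct" for a different player; because the model is built only from the user's pieces, what counts as a mistake is defined by their own habits.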

One for All

PRISM doesn’t just create audio—it creates precise MIDI and dynamic virtual instruments capable of resynthesising original audio tracks. PRISM 3’s Personality Profiler personalises performances and composition, allowing users to map their own composition and performance style onto existing tracks.

All for One

Or, users can apply the composition and performance styles of officially-licensed artists, mapped onto their own performances, to both MIDI and audio tracks.

You've Heard the Expression

Artificial intelligence can generate music that is indistinguishable from music made by humans. The human desire to create, though, is a function of our need to express ourselves. AI that creates for us can never be of us. PRISM allows us to be more expressive. At its heart, PRISM was created to achieve the one goal shared by all artists: to be better, to become the best version of ourselves.

Evans introduced many elements of PRISM on Flying Colors’ Live at the Z7.